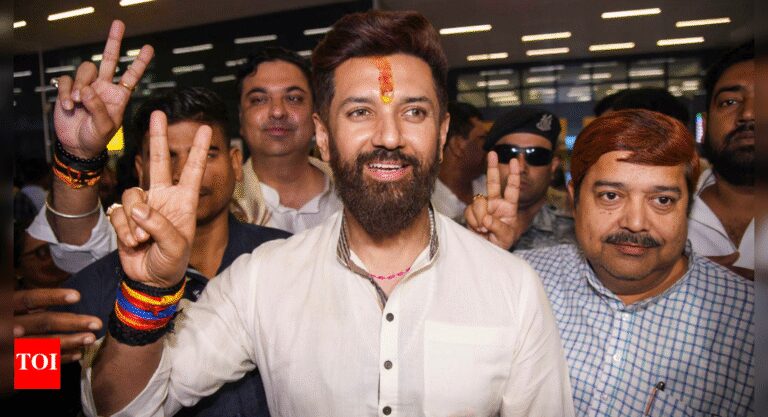

In a world where seeing is no longer believing, India is facing a new digital menace: AI-generated deepfakes. These hyper-realistic videos and audio clips don’t just distort reality. They manipulate public perception, spread misinformation and undermine trust in democracy itself. With 85.5% of Indian households owning at least one smartphone, mobile phones have become India’s bank, classroom and television, making citizens more vulnerable to digital deception.

During the 2024 elections, for instance, satirical deepfakes became a campaign tool of sorts. Apart from resurrecting dead leaders, they included clips showing PM Narendra Modi dancing the garba with women and Kamal Nath dissing a popular welfare scheme. Though investigations and arrests followed, such videos blur the line between fact and fiction, distorting voter opinion and putting the integrity of the democratic process at risk.

Cybersecurity experts warn that AI is also becoming a key tool in cybercrime: 80% of phishing campaigns in 2024 involved AI, with deepfakes exploited for scams. “When you can fake images, audio, and video, how do you know if a real video is real? Suddenly, everything is in question,” said Hany Farid, professor at the University of California, Berkeley, in a TOI interview last year about the worrying rise in AI misuse.

The deepfake threat extends beyond politics. Recently, finance minister Nirmala Sitharaman highlighted the misuse of AI to clone voices and produce fake videos impersonating her, warning of potential financial scams and the erosion of public trust. “I have seen several deepfake videos of myself being circulated online, manipulated to mislead citizens and distort facts,” Sitharaman said at an event in Mumbai. “Criminals are using AI to mimic voices, clone identities, and create lifelike videos that can manipulate people,” she added. Given that over 97% of India’s urban youth aged 15 to 29 own a smartphone, the impact of such misinformation on a generation is worrying, say observers.

“Deepfakes began in 2016. Back then, it was a joke. But the technology has only gotten better. Earlier, there were photoshopped face swaps. Now there are lipsync fakes,” said Prof. Farid, warning that the tech has become more refined, raising the risk of harassment of women through the use of non-consensual sexual imagery. In Nov 2023, actress Rashmika Mandanna became a victim of a viral deepfake video that superimposed her face onto compromising content, sparking widespread outrage. “The very term ‘deepfake’ comes from a Reddit user who in the early days of this technology used it to create porn,” said Farid, calling the new term ‘Generative AI’ clever “rebranding” by the male-dominated tech industry.

As AI evolves, India must strengthen legal frameworks, enhance digital literacy, and bring together minds across sectors to ensure safety, say experts. “We need to label everything as real or not real,” said Farid. “We need real disclosures,” he added. To combat the deepening threat of deepfakes, TOI has already started armouring up. Watch out for our ‘Don’t Get Scammed’ stories to stay informed and aware. After all, as Farid puts it, “if you don’t know what’s real, how will you know what’s false?”